Mind Your Logs: How a Jenkins Build Log Leaked Everything

How much can an inconspicuous Jenkins log leak? Everything.

One fine day, amid the deluge of articles we share on our Slack channels, Avinash posted one on leaklooker. We were working on making our perimeter more secure at the time, which is how it came up.

This blog was published with the company’s approval; its sole purpose is to spread awareness and share the technical learnings.

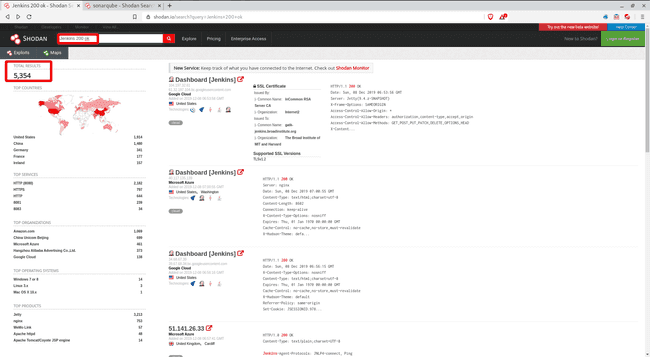

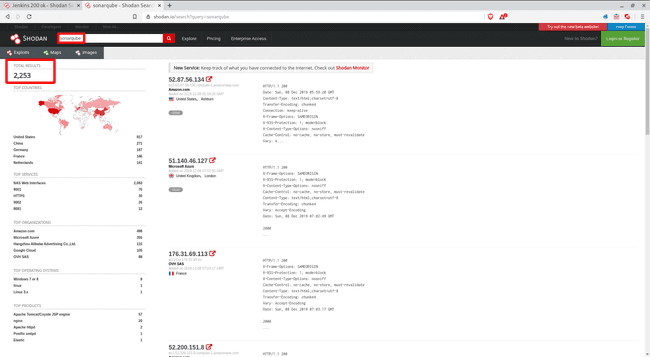

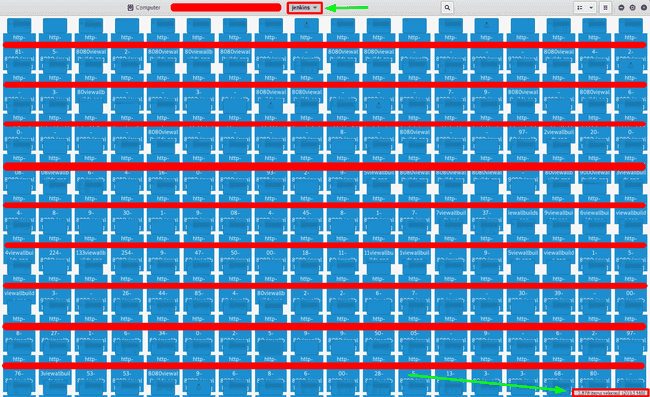

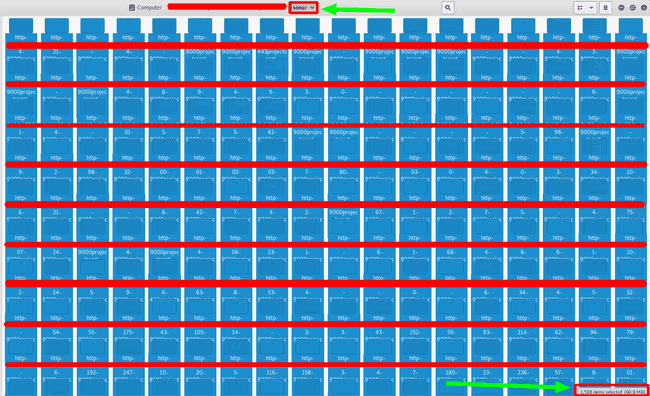

As I read the article, I noticed the author mentioned some dorks for Jenkins and Sonarqube. I had had my first encounter with Jenkins and Sonarqube quite recently, so these dorks looked familiar. I started with some manual queries on Shodan, which returned more than 5000 results for Jenkins alone and another 2000 for Sonarqube. I checked a few results manually and then moved on to what I do best: automating the work ( let the machines do what they do best :P ).

Jenkins alone has over 5000 Records

Sonarqube has another 2000 results

Now I had almost 5000 URLs, and visiting each Jenkins dashboard to check whether it was leaking anything was not feasible ( especially since I wasn’t sure what could be leaking, or where ). I checked a few URLs manually to figure out what I could automate. There was nothing consistent enough to build a script around, but if I could look at each page, with its names and other details, I could judge whether it was worth the time.
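Turning a pile of search results into a clean list of dashboard URLs is the kind of step that is easy to script. The following is a minimal sketch, not the script used here: it assumes each exported record is a dict with `ip_str` and `port` keys, plus an `ssl` key when the service answered over TLS. The function names are hypothetical.

```python
from urllib.parse import urlunsplit


def record_to_url(record: dict) -> str:
    """Build a base URL from one search-result record.

    Assumed record shape: {"ip_str": ..., "port": ..., "ssl": {...}},
    where "ssl" is present only for TLS services.
    """
    scheme = "https" if record.get("ssl") else "http"
    host = record["ip_str"]
    if ":" in host:
        # IPv6 literals must be wrapped in brackets inside a URL.
        host = f"[{host}]"
    port = record["port"]
    # Leave the port out when it is the default for the scheme.
    default_port = 443 if scheme == "https" else 80
    netloc = host if port == default_port else f"{host}:{port}"
    return urlunsplit((scheme, netloc, "/", "", ""))


def unique_urls(records: list[dict]) -> list[str]:
    """Return de-duplicated URLs, keeping first-seen order."""
    seen = set()
    urls = []
    for record in records:
        url = record_to_url(record)
        if url not in seen:
            seen.add(url)
            urls.append(url)
    return urls
```

The resulting list can then be fed to whatever does the visiting, whether that is a browser tab or a screenshot tool.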

Here comes the next leg of automation: ‘screenshot them all’. I had some prior experience with this method, having used chrome_shots ( with some changes ) to screenshot a large number of pages. With ~2000 screenshots in hand, I started looking through them, a few at first and then more.

( There are fewer screenshots than search results because the two result counts above are from a recent search, whereas this automation ran about 5 months earlier. )

Jenkins ( Almost 3000 screenshots )

Sonarqube ( almost 1600 screenshots )

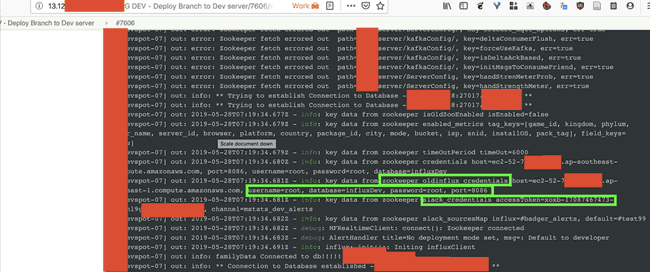

Eventually I landed on an instance that was building dev builds for the ‘one who must not be named’ company ( hereafter referred to as ‘redacted.com’ ). With a sliver of hope, I started looking at its builds and console logs. Jenkins masks most secrets, but if everything worked perfectly, why would there be any ‘bugs’ at all? Then I found a Slack token and the ‘root’ credentials of a public Influx DB server in those logs:
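Reading console logs by eye works for one instance, but the same check is easy to run against your own build logs. Below is a minimal sketch of a log scanner. The two patterns are assumptions rather than the exact strings from this incident: Slack tokens beginning with `xox` plus a type letter, and credentials embedded in a URL as `scheme://user:password@host`.

```python
import re

# Assumed patterns, not an exhaustive list of secret formats.
PATTERNS = {
    "slack_token": re.compile(r"xox[abprs]-[0-9A-Za-z-]{10,}"),
    "url_credentials": re.compile(
        r"[a-z][a-z0-9+.-]*://[^/\s:@]+:[^/\s@]+@[^\s/]+"
    ),
}


def find_secrets(log_text: str) -> list[tuple[int, str, str]]:
    """Return (line_number, pattern_name, matched_text) for each hit."""
    hits = []
    for number, line in enumerate(log_text.splitlines(), start=1):
        for name, pattern in PATTERNS.items():
            for match in pattern.finditer(line):
                hits.append((number, name, match.group(0)))
    return hits
```

Running `find_secrets` over the console output of every job, for example as a nightly task, flags this kind of leak before someone else finds it.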

Slack and Influx DB Creds leaking from zookeeper’s logs

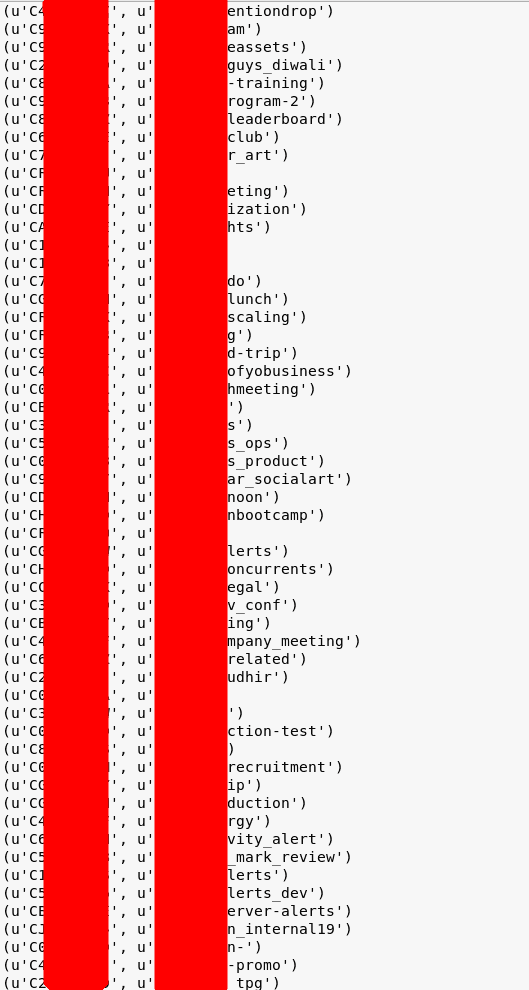

After I got these credentials, I went on to verify that they worked and were not just test credentials or ones with very limited access. I tried fetching the channel list and the users from the Slack directory, and it succeeded: there were about 1000 channels and 200 employees. I then pulled the people in their Slack group and the conversations in a few channels ( like the one mentioned in the console log above, mstatsdevalerts ) using the Slack APIs. At that point I was pretty sure this was important and should be reported.
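With around 1000 channels, a listing like this needs pagination. Here is a minimal sketch of cursor-based paging against the Slack Web API, assuming the `conversations.list` and `users.list` methods and their `response_metadata.next_cursor` field. The helper names `slack_call` and `list_all` are hypothetical, and this is not the script used here.

```python
import json
import urllib.parse
import urllib.request
from typing import Callable

API_BASE = "https://slack.com/api/"


def slack_call(token: str, method: str, params: dict) -> dict:
    """Call one Slack Web API method and return the decoded JSON body."""
    url = API_BASE + method + "?" + urllib.parse.urlencode(params)
    request = urllib.request.Request(
        url, headers={"Authorization": f"Bearer {token}"}
    )
    with urllib.request.urlopen(request, timeout=10) as response:
        return json.load(response)


def list_all(call: Callable[[str, dict], dict], method: str, key: str) -> list[dict]:
    """Collect every item under `key`, following next_cursor until it is empty."""
    items: list[dict] = []
    cursor = ""
    while True:
        params = {"limit": 200}
        if cursor:
            params["cursor"] = cursor
        page = call(method, params)
        if not page.get("ok"):
            # Slack reports failures in the body, e.g. an invalid token.
            raise RuntimeError(page.get("error", "unknown error"))
        items.extend(page.get(key, []))
        cursor = page.get("response_metadata", {}).get("next_cursor", "")
        if not cursor:
            return items
```

Usage would look like `list_all(lambda m, p: slack_call(token, m, p), "conversations.list", "channels")`. Passing the caller in as a function keeps the paging logic testable without touching the network.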

Channel List

redacted.com didn’t have a public bug bounty page, so I wasn’t sure how to reach the person responsible. My prior experience with Indian companies hadn’t been very good either: a reputable Indian travel booking portal once redirected my CSRF report to customer care, who kept asking me:

“Sir, what problem are you facing with this ?”

This is where Avinash Jain (@logicbomb_1) helped me again ( he has quite a lot of experience with Indian companies and is something of an expert on the Indian bug bounty scene ). I drafted a mail and sent it to the relevant contacts, people who would understand it.

Meanwhile, I started gauging the depth of this exposure. As you can see from the partial screenshot above, there were quite a few ‘interesting’ channels that could contain sensitive info. I pulled a few messages from some of them, and they did contain AWS credentials sent by a bot to users on Slack, along with other sensitive info. People were even sharing the ‘wifi password’ on the ‘#general’ channel! That was really silly.

Using some of these credentials, I could list their buckets and even read from and write to them. The buckets contained the codebases of both their server and client applications.

Partial output of: aws s3api list-buckets --query Buckets[].Name

![Partial output of aws s3api list-buckets](/static/4329203905d4d74ae1793d408874b27d/494f9/aws_s3api_list-buckets.png)

Key Takeaways

- Automate them all: let machines take over ( the mundane tasks only ). While I had automated the screenshot part, I was also checking these Jenkins instances for RCE ( i.e. instances with an open Script Console, and I did find quite a few ).

- Don’t presume anything: Jenkins usually replaces secrets with asterisks, but it can’t mask tool output, and in this case it was zookeeper that leaked the credentials.

- No secret sauce: bugs are simple, persistence is the key.
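On the second takeaway: since the CI server only masks the values it knows about, one defensive option is to pass a chatty tool's output through a pattern-based filter before it reaches the build log. This is a minimal sketch, not a Jenkins feature. The patterns are assumptions (Slack-style tokens and `user:password` pairs inside URLs), and `redact_lines` is a hypothetical helper.

```python
import re
from typing import Iterable, Iterator

# Assumed patterns; extend them for the secret formats your tools emit.
SECRET_PATTERNS = [
    # Slack-style tokens such as xoxb-...
    re.compile(r"xox[abprs]-[0-9A-Za-z-]{10,}"),
    # The user:password part of scheme://user:password@host
    re.compile(r"(?<=://)[^/\s:@]+:[^/\s@]+(?=@)"),
]


def redact_lines(lines: Iterable[str], mask: str = "****") -> Iterator[str]:
    """Yield each line with anything matching a secret pattern masked."""
    for line in lines:
        for pattern in SECRET_PATTERNS:
            line = pattern.sub(mask, line)
        yield line
```

In a build step this could sit in a pipe, reading `sys.stdin` and writing the redacted lines to `sys.stdout`, so the raw output never lands in the console log.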

Thank you everyone :)

I tweet (mostly) about security stuff on Twitter: @aseemshrey